What did we learn in assessing Trustworthy AI in practice?

If you are interested and have time you can watch this video.

It gives an overview of our research over the last 2.5 years on assessing trustworthy AI in healthcare in practice:

What did we learn in assessing Trustworthy AI in practice?

Roberto V. Zicari, Z-Inspection® Initiative

AI Ethics online–Chalmers, April 20, 2021

YouTube: https://www.youtube.com/watch?v=Jt63ZUbrBJM

Download Copy of the Presentation: https://z-inspection.org/wp-content/uploads/2021/04/Zicari.CHALMERSApril20.2021.pdf

For any questions and/or ideas of possible collaboration, please do not hesitate to contact me.

Stay safe

Roberto

…………………………………….

Prof. Roberto V. Zicari

Z-Inspection® Initiative

Affiliated Professor, Yrkeshögskolan Arcada, Helsinki

Adjunct Professor, Seoul National University, South Korea


The legislative proposal for AI by the European Commission has been published today.

The highly anticipated legislative proposal for AI by the European Commission has been published today.

Read the EU Regulatory Proposal on AI:

EU Press Release

Press release 21 April 2021 Brussels

Europe fit for the Digital Age: Commission proposes new rules and actions for excellence and trust in Artificial Intelligence

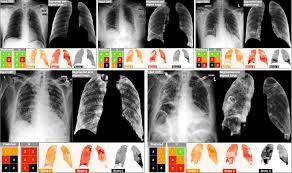

Kick off Meeting (April 15, 2021) Assessing Trustworthy AI. Best Practice: Deep Learning for predicting a multi-regional score conveying the degree of lung compromise in COVID-19 patients. In cooperation with Department of Information Engineering and Department of Medical and Surgical Specialties, Radiological Sciences, and Public Health – University of Brescia, Brescia, Italy

On April 15, 2021, we had a really great kick-off meeting for this use case:

Assessing Trustworthy AI. Best Practice: Deep Learning for predicting a multi-regional score conveying the degree of lung compromise in COVID-19 patients.

71 experts from all over the world attended.

Worldwide, the saturation of healthcare facilities, due to the high contagiousness of the SARS-CoV-2 virus and the significant rate of respiratory complications, is one of the most critical aspects of the ongoing COVID-19 pandemic.

The team of Alberto Signoroni and colleagues implemented an end-to-end deep learning architecture designed to predict, from chest X-ray (CXR) images, a multi-regional score conveying the degree of lung compromise in COVID-19 patients.

We will work with Alberto Signoroni and his team and apply our Z-Inspection® process to assess the ethical, technical and legal implications of using Deep Learning in this context.

For more information: https://z-inspection.org/best-practice-deep-learning-for-predicting-a-multi-regional-score-conveying-the-degree-of-lung-compromise-in-covid-19-patients/

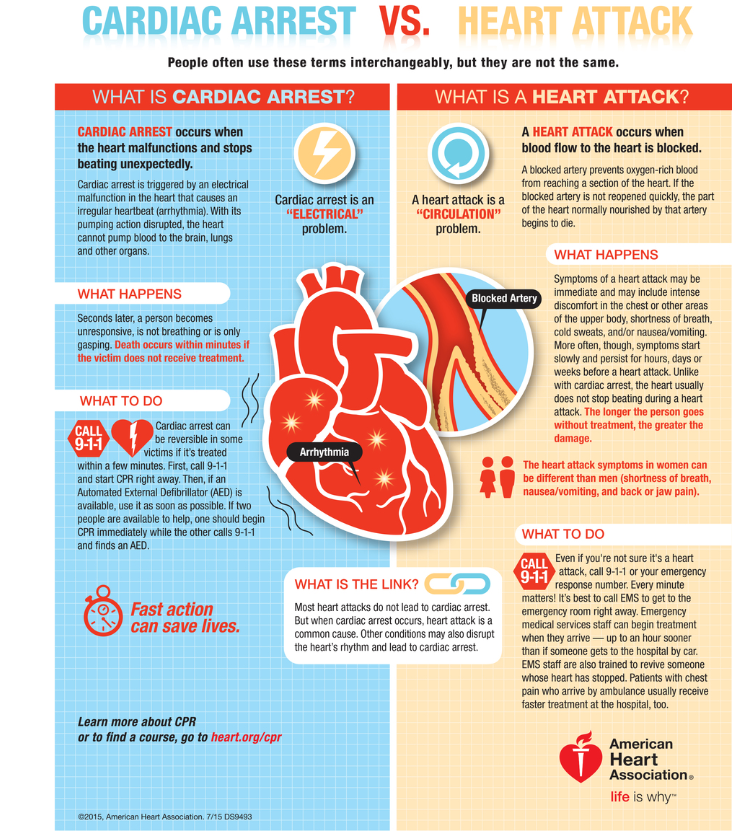

This AI detects cardiac arrests during emergency calls

Jointly with the Emergency Medical Services Copenhagen, we completed the first part of our trustworthy AI assessment.

An ML system is currently used as a supportive tool to recognize cardiac arrest in 112 emergency calls.

A team of multidisciplinary experts used Z-Inspection® and identified ethical, technical and legal issues in using such an AI system.

This confirms some of the ethical concerns raised by Kay Firth-Butterfield back in June 2018:

“This is another example of the need to test and verify algorithms,” says Kay Firth-Butterfield, head of Artificial Intelligence and Machine Learning at the World Economic Forum.

“We all want to believe that AI will ‘wave its magic wand’ and help us do better and this sounds as if it is a way of getting AI to do something extremely valuable.

“But,” Firth-Butterfield added, “it still needs to meet the requirements of transparency and accountability and protection of patient privacy. As it is in the EU, it will be caught by GDPR, so it is probably not a problem.” However, the technology raises the fraught issue of accountability, as Firth-Butterfield explains. “Who is liable if the machine gets it wrong? the AI manufacturer, the human being advised by it, the centre using it? This is a much debated question within AI which we need to solve urgently: when do we accept that if the AI is wrong it doesn’t matter because it is significantly better than humans. Does it need to be a 100% better than us or just a little better? At what point is the use, or not using this technology negligent?”

Source: https://www.weforum.org/agenda/2018/06/this-ai-detects-cardiac-arrests-during-emergency-calls/

Image: CPR

The full report has been submitted for publication. Contact me if you would like to know more. RVZ

Resources:

– Article World Economic Forum, 06 Jun 2018.

Download the Z-Inspection® Process

– “Z-Inspection®: A Process to Assess Ethical AI”

Roberto V. Zicari, John Brodersen, James Brusseau, Boris Düdder, Timo Eichhorn, Todor Ivanov, Georgios Kararigas, Pedro Kringen, Melissa McCullough, Florian Möslein, Karsten Tolle, Jesmin Jahan Tithi, Naveed Mushtaq, Gemma Roig, Norman Stürtz, Irmhild van Halem, Magnus Westerlund.

IEEE Transactions on Technology and Society, 2021

Print ISSN: 2637-6415

Online ISSN: 2637-6415

Digital Object Identifier: 10.1109/TTS.2021.3066209

DOWNLOAD THE PAPER

The Z-Inspection® Process is available for Download!


Professor Sibrand Poppema, Member of the Z-Inspection® Advisory Board, Receives German Order of Merit

Award ceremony with Ambassador Dr. Peter Blomeyer

Photo: Sunway University

Naveed Mushtaq. May he rest in peace.

Our team member, colleague and friend Naveed Mushtaq passed away last night. His heart did not make it.

He suffered a sudden cardiac arrest a few weeks ago.

May he rest in peace.

We pray for the family, that God will grant them serenity in this very difficult time in their lives.

What a Philosopher Learned at an AI Ethics Evaluation

Our expert James Brusseau (PhD, Philosophy), Pace University, New York City, USA, wrote an essay documenting what he learned from working on a Z-Inspection® assessment of an existing, deployed, and functioning AI medical device.

What a Philosopher Learned at an AI Ethics Evaluation

AI ethics increasingly focuses on converting abstract principles into practical action. This case study documents nine lessons for the conversion learned while performing an ethics evaluation on a deployed AI medical device. The utilized ethical principles were adopted from the Ethics Guidelines for Trustworthy AI, and the conversion into practical insights and recommendations was accomplished by an independent team composed of philosophers, technical and medical experts.

This essay contributes to the conversion of abstract principles into concrete artificial intelligence applications by documenting learnings acquired from a robust ethics evaluation performed on an existing, deployed, and functioning AI medical device.

The ethics evaluation formed part of a larger inspection involving technical and legal aspects of the device that was organized by computer scientist Roberto Zicari (2020). This document is limited to the applied ethics, and to the author's experience as a philosopher.

These are nine lessons I learned about applying ethics to AI in the real world.

Zicari, Roberto (2020). Z-Inspection: A process to assess trustworthy AI.